Test R in Azure DevOps

I have previously written about how to test R in VSTS.

Now VSTS is called Azure DevOps and I have updated my test pipeline.

The overall idea has not changed:

I provide a Docker container with R where I install my custom package and test it.

The container runs on a hosted agent in Azure DevOps.

But Linux agents are now out of preview, so I use “Hosted Ubuntu”.

My build pipeline looks like this:

Remember the updated R Docker images I recently wrote about?

I have Docker images with packages for testing R code, one for each of a number of R versions.

They are named r-test:<R version>, with the R version as the tag.

The DESCRIPTION file of an R package should always state which version(s) of R the package runs on. This is done in the Depends field, in one of these two forms:

R (== MAJOR.MINOR.PATCH)

R (>= MAJOR.MINOR.PATCH)

For R code that is more than a proof of concept, I use only one version of R.

In my opinion, upgrading to a new version of R requires at least a bump of the MINOR part of the package version.

To extract the R version from the DESCRIPTION file, I use a little regular expression matching in the first Bash task, called “Extract R version”.

It is the following “Inline script”:

RVERSION=$(grep -o "R ([=>]= [0-9]\.[0-9]\.[0-9])" DESCRIPTION | grep -o "[0-9]\.[0-9]\.[0-9]")
echo "R version: $RVERSION"
# Set as Azure DevOps variable to be used in other tasks in the build
echo "##vso[task.setvariable variable=Rversion]$RVERSION"

As noted in the comment, this sets the Azure DevOps variable Rversion to the value of the Bash variable RVERSION, so that other tasks in the build pipeline can access it as $(Rversion).

The first echo prints the version to the log for debugging purposes.
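The extraction can be tried out locally before it goes into the pipeline. The sketch below wraps it in a function (extract_r_version is a hypothetical name) and assumes a constraint with three single-digit version parts, such as R (>= 3.5.1). Using grep -o in the first step keeps only the R (...) part of the line, so version numbers of other packages on the same Depends line are ignored, and the escaped dots match only literal dots.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the R version required in a DESCRIPTION file.
# Assumes a constraint of the form "R (== x.y.z)" or "R (>= x.y.z)"
# with single-digit version parts.
extract_r_version() {
  local description="${1:-DESCRIPTION}"
  # First keep only the "R (>= x.y.z)" part, then only the version number
  grep -o "R ([=>]= [0-9]\.[0-9]\.[0-9])" "$description" |
    grep -o "[0-9]\.[0-9]\.[0-9]"
}
```

For example, extract_r_version DESCRIPTION prints 3.5.1 when DESCRIPTION contains Depends: R (>= 3.5.1).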

Run tests

In the repository with the R package I have a Dockerfile.test that creates a test environment based on r-test.

In the simplest form it looks like this:

ARG R_VERSION
FROM <registry>.azurecr.io/r-test:${R_VERSION}
COPY --chown=shiny:shiny . /home/shiny/package/

If the R package has system dependencies, they are installed with RUN instructions before the COPY instruction.

The <registry> can also be a build argument like R_VERSION, but I use a single registry for the R images.

In the “Build test image” task I use the following settings:

The “Image name” is not important; we only need it during the tests.
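For reference, the “Build test image” task corresponds roughly to a docker build call like the one sketched below. This is a hedged sketch, not the task's exact command line: the function name and the tag test:latest are assumptions, and it relies on Azure DevOps exposing the pipeline variable Rversion to scripts as the environment variable RVERSION.

```shell
#!/usr/bin/env bash

# Sketch of a command-line equivalent of the "Build test image" task.
# Assumptions: Dockerfile.test is in the repository root and the image is
# tagged test:latest (the name only matters during the tests).
build_test_image() {
  # RVERSION is the environment variable Azure DevOps creates for Rversion
  docker build \
    --file Dockerfile.test \
    --build-arg R_VERSION="${RVERSION:?RVERSION is not set}" \
    --tag test:latest \
    .
}
```

The build argument R_VERSION is what the ARG line in Dockerfile.test picks up to choose the matching r-test base image.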

In the “Run tests in image” task I run the freshly created Docker image.

If a container is started with a Docker task, it is deleted when it stops (the log shows that the Docker task uses the --rm flag).

I need to copy the test results out of the container after it stops, so I run the container with a Bash task instead.
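A minimal sketch of such a Bash task, assuming the image is tagged test:latest and that its default command runs the tests and writes test-results.xml and coverage.xml; the function name is hypothetical. The essential point is that docker run is called without --rm, so the stopped container is kept.

```shell
#!/usr/bin/env bash

# Hypothetical "Run tests in image" step: run the test image in the foreground.
# Assumes the image's default command runs the tests and writes the XML files.
run_test_container() {
  local image="${1:-test:latest}"
  # No --rm flag: the stopped container is kept so results can be copied out
  docker run "$image"
}
```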

Get test results

The task “Copy test results from test image” is a Bash task that copies test-results.xml and coverage.xml from the most recently created container to the host.

CONTAINERID=$(docker ps -alq)
docker container cp "$CONTAINERID":/home/shiny/package/test-results.xml .
docker container cp "$CONTAINERID":/home/shiny/package/coverage.xml .
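docker ps -alq returns the most recently created container on the machine, which works on a fresh hosted agent. A variant that does not depend on this is to create the container explicitly and keep its ID, as sketched below. This swaps docker run for docker create followed by docker start --attach; the function name, the default image name test:latest and the target folder are assumptions.

```shell
#!/usr/bin/env bash

# Hypothetical variant: run the tests and copy the results using an explicit
# container ID instead of "the last created container".
run_and_collect_results() {
  local image="${1:-test:latest}" dest="${2:-.}"
  local cid status f
  # docker create prints the ID of the new (not yet started) container
  cid=$(docker create "$image") || return 1
  # Start it attached so the test output ends up in the build log
  docker start --attach "$cid"
  status=$?
  for f in test-results.xml coverage.xml; do
    docker container cp "$cid:/home/shiny/package/$f" "$dest"
  done
  # Remove the stopped container and pass on the status of the attached run
  docker container rm "$cid" > /dev/null
  return "$status"
}
```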

Finally, test-results.xml and coverage.xml are published to DevOps using the standard “Publish Test Results” and “Publish Code Coverage Results” tasks.

In DevOps a summary looks like this:

And the Tests tab looks like this:

Not all tests pass in this example.

I have used the skip functions from the testthat package to skip tests with unfulfilled requirements, such as security credentials.

Even though coverage.xml is sent to DevOps, there is a warning that “No coverage data found”.

The Cobertura file generated by the currently released version of the covr package is not in the format DevOps expects.

This is fixed in the GitHub repository and will hopefully make it into a CRAN release eventually.

If this functionality is important, install covr directly from GitHub.
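In a Bash task (or a RUN instruction in Dockerfile.test), the development version could be installed with something like the sketch below. It assumes that R and the remotes package are available in the image and that covr's GitHub repository is r-lib/covr; the function name is hypothetical.

```shell
#!/usr/bin/env bash

# Hypothetical helper: install the development version of covr from GitHub.
# Assumes R and the remotes package are installed.
install_covr_from_github() {
  Rscript -e 'remotes::install_github("r-lib/covr")'
}
```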

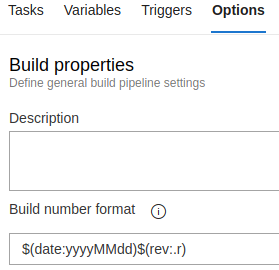

Build numbers

Every time a pipeline runs, it gets a (unique) ID.

I don’t find the default ID particularly readable, so in the “Options” tab I set “Build number format” to $(date:yyyyMMdd)$(rev:.r).
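To illustrate the format: $(date:yyyyMMdd) is the date of the run, and $(rev:.r) appends a revision counter that starts at 1 and increases when the rest of the build number matches an earlier build. The hypothetical function below mimics this for a given date and a list of earlier build numbers; it only shows what the numbers look like, since Azure DevOps computes them itself.

```shell
#!/usr/bin/env bash

# Hypothetical illustration of the build number format $(date:yyyyMMdd)$(rev:.r):
# given the date part and earlier build numbers, print the next build number.
next_build_number() {
  local today="$1"
  shift
  local max=0 n rev
  for n in "$@"; do
    case "$n" in
      "$today".*)
        rev=${n#"$today".}
        if [ "$rev" -gt "$max" ]; then max=$rev; fi
        ;;
    esac
  done
  echo "$today.$((max + 1))"
}
```

For example, next_build_number 20190115 20190115.1 20190115.2 prints 20190115.3, while the first run on a new date gets revision 1.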

Reuse

To reuse this test setup for other R packages in the same DevOps project, the tasks can be collected in a task group: select them (hold down Ctrl and click the tasks) and right-click to get this menu:

The saved task group is now available when creating another pipeline.

Alternative run?

When creating this pipeline I tried to skip the step where I copy test-results.xml and coverage.xml from the stopped container to the host.

My idea was that a container can mount a folder on the host when it runs, to ease the exchange of files between container and host.

That is, I would change the run command to something like:

docker run -v $(Common.TestResultsDirectory):/home/shiny/test test:latest

Any file in /home/shiny/test in the container should then also appear in the folder $(Common.TestResultsDirectory) on the host (a build variable).

While this works on My Machine(TM), the hosted agents do not allow it, and when testthat tries to write an XML file it fails:

Error in doc_write_file(x$doc, file, options = options, encoding = encoding) :
  Error closing file
Calls: … -> write_xml.xml_document -> doc_write_file
In addition: Warning messages:
1: In doc_write_file(x$doc, file, options = options, encoding = encoding) :
  Permission denied [1501]
2: In doc_write_file(x$doc, file, options = options, encoding = encoding) :
  Permission denied [1501]

Debugging on hosted machines is difficult, so I have not pursued this very far.
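If one wanted to pursue it, a possible first step is to compare the owner of the mounted host folder with the user the container runs as, since a mismatch between them is one common cause of “Permission denied” on bind mounts. This has not been verified as the cause here. The sketch assumes GNU stat on the agent and the image name test:latest; the function name is hypothetical, and on the agent the host folder would presumably be passed as "$COMMON_TESTRESULTSDIRECTORY".

```shell
#!/usr/bin/env bash

# Hypothetical diagnostic for the mount problem: show who owns the host folder
# and which user the container runs as.
check_mount_permissions() {
  local host_dir="$1" image="${2:-test:latest}"
  # GNU stat: owner name, numeric owner ID and octal permissions
  echo "Host folder: $(stat -c '%U (uid %u), mode %a' "$host_dir")"
  # Override the entrypoint (this also clears the image's CMD) to print the user's IDs
  echo "Container user: $(docker run --rm --entrypoint id "$image")"
}
```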